AI ethics tennis match

At this event, the attendees were split into two groups, and each group was assigned to argue for or against a list of AI-related questions. Often you had to express opinions that went against your own values, which created an interesting dynamic in the discussions and produced some fascinating and unexpected arguments.

It turned out to be a good way to explore or brainstorm around these questions. It also engaged the audience much more than a regular presentation. So, enjoy this read and do not take it too seriously ;-)

Q: Should citizens be obliged to supply personal data for certain purposes, such as medical research? (data contribution as a moral imperative)

Yes: Today we have statistics on illness but not much on the healthy population. Of course the state should be allowed to collect this vital information. Today we do not have enough of it to improve disease prevention.

No: The data could be misused to deny citizens services like insurance, because you could see trends and make predictions about an individual's future health.

Yes: That is good. If someone is unfit to drive, it is a good thing that we can stop that person from driving before they cause an accident.

No: Knowledge is power, and if I give away my information, I won't know who is controlling it. The information can be misused for purposes other than the ones I intended.

Yes: Today we have power structures where it is unclear what hidden motives drive the people in power. If we get more data, we can reveal those motives so that people can make a more informed choice about whom they vote for, or whom they should propose as the next vice president at their company.

Yes: The data misuse problem can be regulated away. We already have the GDPR, and if we create a system that logs everyone who has accessed your data, you can go in and decide who may use it and for what. We should really focus this discussion on the objectives, and on what we can do once we have a complete data set from the entire population rather than just data about sick people.

No: The problem here is that the question suggests it should be enforced, and you need a lot of trust in a system that manages all this data. If it falls into the wrong hands, you lose control over the information.

Yes: We pay taxes, so why should we not provide data as a kind of tax, as part of being a good citizen?

No: No. You can always switch tax regimes, and not everyone pays taxes. Another problem with supplying data is that you need a way to make sure it is accurate. And unlike tax money, data remains: you cannot spend data the way you spend money.

Yes: We could set up stronger legal regulations against misuse of data. This would remove most of the opposition to collecting personal data.

No: Then we must define what misuse of data is. For instance, you might accept the use of certain data to track terrorists, but in the process you are also observing innocent people, which could be considered misuse of data.

No: Then the right to be forgotten will no longer apply...

Q: Should ethical standards and rules support AI development and who should be making them?

Yes: Of course we need ethical standards. Look at what happened with the internet: it came into our homes without any regulations, and now we are living with a generational gap in how we deal with the public internet, where people live on the dark net. It was like opening Pandora's box. We need standards, rules and ethical guidelines.

No: You say that the internet should have been controlled from the beginning. That goes against the idea of the World Wide Web, where everyone can share everything. Despite some bad sides, it has brought us many more good things that might not have happened if the net had been more regulated from the beginning.

Yes: The question says "rules that support AI". In this sense rules are good, because they might help the AI make better decisions and avoid bad ones. Ethical guidelines can help in situations where the answer is not obvious.

No: You can read the question as adding standards on top of those we already have in society. Different societies have different regulations that developers follow, so this would mean the AI has to follow even stricter rules.

Yes: In machine learning we are building models, and the data used in these models needs standards to make sure the results they produce are reliable.

Yes: Let's look at the worst-case scenarios. Say that we do implement standards, whether they are followed strictly or not: in the worst case, you stifle innovation. On the flip side, if you do not have any standards, the worst case could be a global tragedy, and there is no way to know how costly it would be in human lives, or environmentally or economically.

No: I disagree; stifling innovation is a bad thing. If you want to keep your job and be able to afford a vacation, you need innovation. And if you believe that AI will greatly improve the world, we should not put constraints on it.

Yes: Good point, but I do not believe that not implementing any ethics will solve the problem. It won't bring people out of poverty. If you look at the capitalist ideology, it is not lifting anyone out of poverty. The gains are very centralized to the few people who make the most of them; it is not open to everyone. The same thing will happen with AI.

Yes: I would go one step further. How does AI solve tasks? By using a utility function, and a smarter AI is going to design child AIs that solve the problem for it. So you should not only apply ethical standards during development; they should be embedded in the algorithms so that the child AIs inherit the ethical guidelines from the parent AI. Otherwise, this is how we lose control. Two steps forward and the whole thing can go apocalyptic. For instance, we see that people engage more online when they are enraged, so if you tell the algorithm to optimize for maximum engagement, you get an internet full of hateful comments, and that was never the intention. And when new AIs start creating subtasks, we have a nightmare.

No: You are saying that it is the humans who write the comments, not the robots. So what you are pointing out is actually us doing the negative thing, not the robots. Today we do not have ethical standards; we have ethics. Where are we nowadays? Do we have killer robots? No, we have self-driving cars, intelligent decision-makers, smart software systems and intelligent agents, and all of this with no ethical standards.

Q: Should all application areas of AI be allowed and if not, which ones should be excluded?

Yes: Let's focus on the killer robots. Of course some rogue group or evil country in the world will build advanced killer robots, so we must also build killer robots to defend ourselves. That is why you should be allowed to do anything.

No: That just sets a bad precedent: assuming that no one follows the rules. We must assume that people follow the rules, and if you can assume that, then it is quite clear that killer robots should not be allowed.

Yes: Can you assume that, given history? Look at nuclear bombs, for instance. I see your point, but I do not believe in it.

No: The problem with AI is not about killing people or not killing people. It is that when you allow killing, the killing process should be transparent, and the logic behind the decision to kill a person should be understandable. The problem with AI is that the decision-making is sometimes so complex that it is almost impossible to know what the machine was picking up on. That is why killer robots should not exist: we cannot track them. They are just applications.

Yes: We have the same problem with human soldiers. You can train them and ask them to follow certain standards and rules, but you cannot predict all their actions in battle. So your argument does not really apply.

No: Humans can be held accountable, systems cannot.

Yes: Why not? If the system has gone rogue, it could be held accountable and, in that case, be eliminated or reprogrammed. Nothing in the question says there cannot be a security system for rogue AI systems.

No: Software can easily be copied and stolen, and if that happens to a rogue system, who will be accountable for its misuse? You can usually trace stolen guns, but software is a lot harder to trace.

Yes: Have you heard about the selfish gene? We humans are very selfish, we are going to kill each other anyway, and AI is coming, so we should just embrace it, the good and the bad.

Yes: To follow up on the accountability point: some people assumed that Iraq had nuclear weapons, and when that turned out to be false, no one was held accountable for the war that broke out based on this belief.

Yes: Moving away from Armageddon: we are building systems without ethical rules today. There are self-driving cars that still hit people. Holding a human accountable is comparable to taking a failing computer, scrapping it and building a better one. In that case you do not need ethics; you just need to build better AIs that do what we want them to do. The trial-and-error way.

No: Trial and error? How many people are going to die? "This is our alpha AI, it might kill you." This is insane; we are talking about human lives here. And your argument is that humans have done this since the beginning of time. Yes, but what makes technology so awesome is that it can scale. How long does it take you to kill a large number of people, compared to a machine that can be everywhere in the world at once?

Q: Is artificial consciousness possible?

No: Let's start with what consciousness is. Since we do not understand it, it will be impossible to create it.

Yes: If we define it as a meta-level on top of the decision-making, that is as clear a definition as you will ever be able to make. Then it is easy, because you can build the regular AI and then, on top of that, a governing layer that checks that everything is OK.

Yes: How did consciousness start in the first place? We are conscious, we are made of biological components, and our neurons align in a certain way that creates consciousness. And since many of us are conscious, this can be replicated. So, if you give AI enough time and processing power, it will, much like nature, accidentally become conscious. And if you try to communicate with a gorilla, how do you prove whether it is conscious or not? It is the same with a machine, only harder.

No: But regarding the previous question, if we limit the way AIs are allowed to be developed, they will never be allowed to evolve freely into conscious beings.

Yes: In the previous question, the argument was that nobody follows those rules anyway, so there is no limiting factor for a conscious AI to emerge. The only thing you can know for sure is real is your own consciousness.

Yes: Consciousness is an emergent property of our complex brain, and we will obviously reach a similar level of complexity in the AI brain; thus, it is inevitable that it too will be conscious.

No: So you are saying that human consciousness was predetermined billions of years ago, that given the chance, human consciousness would arise. It might be a theory, but is it real?

Yes: We can look at animals and nature as well. Humans have only 20 senses, while plants can have over 700, so there are arguments that plants are conscious, because they can react to their surroundings. And in the digital age we have computers that are many, many times faster than us at processing digital input, so consciousness through emergence is inevitable.

No: We all know that we are biological. Our brain consists of biological matter with electrical, chemical and other signals, maybe even unknown energies from other dimensions, like a global consciousness. Machines only consist of simple electrical circuits, and though they might be able to simulate consciousness, they will never become conscious for real.

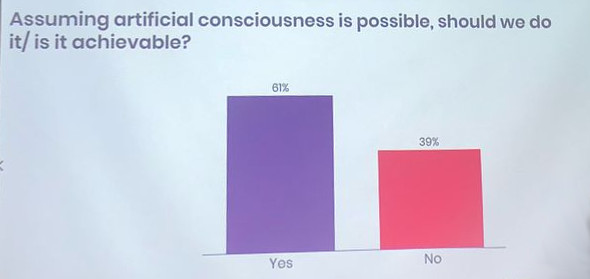

Image: The result of a poll in this group...

Q: Should AI agents appear robotic instead of trying to be as human as possible?

No: If you have an AI impersonating someone close to you, it would be nice to know that it is actually a robot and not the real person. The same goes for relationships. If you are dating someone, it might be a bit frustrating to realize that it was an AI you were talking to.

No: Another argument: the new Google Assistant can make calls on behalf of its user, and the business owner on the other end might not realize that it is an AI. Google says it can use this to call businesses and ask for opening hours or menus, so that it can publish them in its search results. The businesses might want to know that their replies could be published on the internet for all to see before they engage in a conversation with it.

Yes: When robots are used in a medical setting, it can be very comforting if they are as human as possible.

Yes: Humans are very good at projecting emotions onto anything, really. So if AIs appear more human, they will integrate more easily into society. As for transparency, if they get good enough, we will not have AI but just I (Intelligence) to interact with.

Yes: I think it is inevitable that they will merge with humans, and it is happening really fast. It is more efficient that way. It was determined by the Big Bang, actually. It's deterministic.

Yes: It does not have to look human; it could look like your favourite animal or a cartoon. It could even be an emoji. In that sense there is no real reason for it to be robotic, as the question puts it...

No: But that is not valid, because cosmetic surgery could let humans look like anything in the future: a blue Smurf, a unicorn or something else. But it is still a human being, not an AI, you are talking to.

Q: Should we grant human rights to conscious robots?

No: Of course not, because then, when we have an election, for instance, the AI could just make a billion copies of itself, win the majority of the votes and decide who should be the next president.

Yes: Is voting a human right? Or is there something bigger above it, where voting is a reflection of a system that allows for the self-determination of individuals? The self-determination of individuals is the human right, not the right to vote.

No: That is basically an issue if you follow democracy as a form of governance. If you look at most organisations that promote human rights, they are keen on democracy as a form of governance, and that includes equal voting rights for everybody, not just a selection of the population. Hence, as the UN defines human rights today, the ability to vote is one of them.

Yes: We preach democracy, but it is not like we are democratising every single organisation. There is a hierarchy, there is order, and as many people say, the current form of democracy was not built for the scale we have today. Democracy is still the best system we have come up with so far, since the alternatives are worse, but that does not mean democracy is the ultimate system. On the contrary. So, using AI, we could come up with a better system. And there is also the thought that if we build AIs that are more intelligent than us and do not give them the right to vote, they might get angry at us...

Yes: Imagine that you have a conscious robot that has been living with you for 50 years. It has been given responsibilities and has contributed to society. Then, even if it does not get human rights as such, it should have some set of machine rights that corresponds to our human rights.

No: Of course they should not have human rights. First, we do not believe they can be conscious, and second, if they get human rights, we give them the same legal rights as humans. This means they get the right to reproduce, and then we might soon end up on the extinct end of the scale. So giving them human rights puts us at a disadvantage, due to biological properties we have and robots do not, like degeneration and so on.

Yes: Why don't we let robots gain experience and learn from hundreds of people? Then they will be smarter than us, and we can give them the same rights as us, or even more.

No: Because they are superior.

Yes: To get human rights, you must have a certain level of humanity. The only case in which human rights could be granted to a machine is if that machine proves to be human, meaning it operates under the same limitations as we humans do. You get robot rights; if you want human rights, you must agree to operate within the limits of the human experience.

About degeneration: we are talking about the future here, and maybe degeneration will no longer be a human problem.

- Ethics, AI, Machine Learning, Robots, Consciousness